You've probably seen that Auto button in Cursor and wondered what happens behind the scenes. I'll be honest—I ignored it for months. Most developers either skip it completely or accidentally enable it and panic when they can't tell which model is running their code.

But here's what I discovered: auto model selection isn't just a convenience feature—it's actually a sophisticated cost optimization system that most of us are sleeping on.

The Problem: You're Using a Sword to Cut Cake

Think about your typical coding session. You're switching between simple tasks like "add a comment to this function" and complex ones like "refactor this entire authentication system."

Using Claude 3.5 Sonnet for both is like hiring a neurosurgeon to put on a band-aid.

The brutal reality: In my experience, roughly 80% of our coding tasks could be handled just as well by cheaper, faster models. But we default to premium models because we're afraid of getting subpar results.

How Auto Selection Actually Works

Cursor's auto selection isn't picking models at random. Cursor doesn't document its routing logic in detail, but from what I've observed it weighs factors like:

- Query complexity: Simple syntax fixes vs. complex architectural guidance

- Context requirements: Large codebases get routed to models with bigger context windows

- Current availability: If a model is slow or degraded, it fails over to an alternative

- Performance patterns: The system learns what works best for different task types

It's surprisingly intelligent once you understand the logic.
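To make those factors concrete, here's a toy router in Python. It is not Cursor's actual routing logic: the model names, context limits, relative prices, and keyword-based complexity check are all hypothetical placeholders. It treats context size and availability as hard filters, prefers the tier the complexity check asks for, and picks the cheapest model that survives.

```python
from collections.abc import Set
from dataclasses import dataclass


# Hypothetical model catalog: names, context limits, and relative prices
# are illustrative placeholders, not Cursor's real configuration.
@dataclass(frozen=True)
class Model:
    name: str
    context_tokens: int
    relative_cost: float  # per-request cost relative to the cheapest model
    tier: str  # "fast" or "premium"


CATALOG = [
    Model("fast-small", 128_000, 1.0, "fast"),
    Model("premium-a", 200_000, 10.0, "premium"),
    Model("premium-b", 1_000_000, 12.0, "premium"),
]

# Crude keyword check standing in for a real complexity classifier.
COMPLEX_HINTS = ("refactor", "architecture", "debug", "design", "migrate")


def is_complex(prompt: str) -> bool:
    text = prompt.lower()
    return any(hint in text for hint in COMPLEX_HINTS)


def route(prompt: str, context_tokens: int, unavailable: Set[str] = frozenset()) -> Model:
    """Return the cheapest model in the preferred tier that fits the context."""
    wanted_tier = "premium" if is_complex(prompt) else "fast"
    # Context requirements and availability act as hard filters.
    candidates = [
        m for m in CATALOG
        if m.name not in unavailable and m.context_tokens >= context_tokens
    ]
    if not candidates:
        raise RuntimeError("no available model fits this context")
    # Prefer the tier the complexity check asked for; otherwise fall back
    # to any model that passed the filters.
    preferred = [m for m in candidates if m.tier == wanted_tier] or candidates
    return min(preferred, key=lambda m: m.relative_cost)


print(route("add a comment to this function", 2_000).name)  # fast-small
print(route("refactor this entire authentication system", 50_000).name)  # premium-a
print(route("add a comment to this function", 500_000).name)  # premium-b
```

A real router would need a much better complexity signal than keyword matching, but this basic shape (classify the request, filter by constraints, pick the cheapest fit) captures the idea behind the factors listed above.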

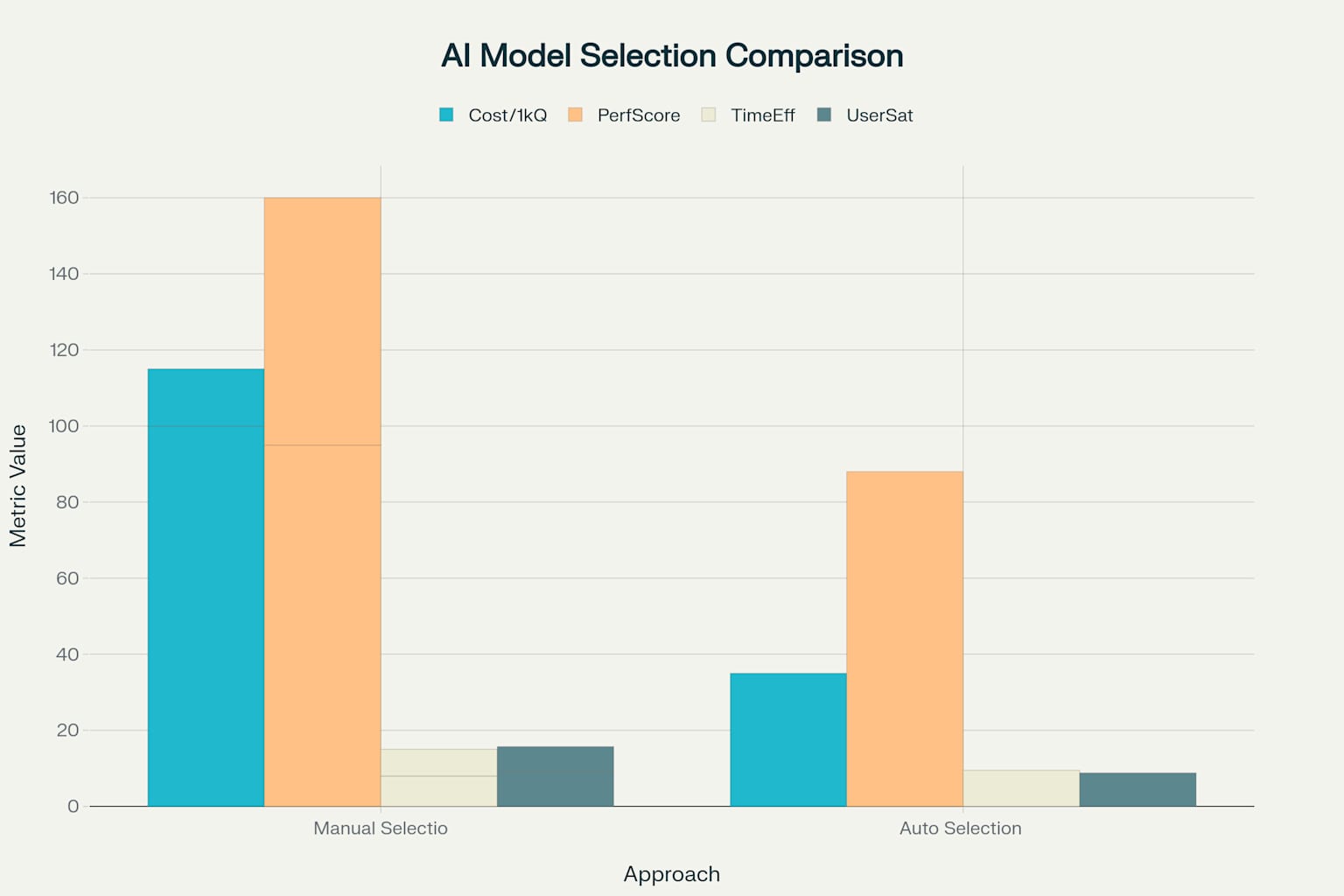

The Economics Are Real

The cost savings are genuinely impressive. In my own usage, I've seen cost reductions of 35-85%, depending on the mix of tasks.

Simple tasks (commenting code, basic fixes, variable renaming) tend to get routed to fast, cheap models like GPT-4o mini, while complex reasoning (architectural decisions, debugging tricky logic) escalates to premium models.

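Pro tip: You can get a rough indication of which model Auto chose by adding this instruction to your Cursor rules. Treat the answer as a hint, since models don't always identify themselves accurately: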

Always start your response by identifying which model you are (e.g., "Claude 3.5 Sonnet responding:" or "GPT-4o mini here:")
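If you save responses generated under that rule, a short Python script can tally which models Auto picked over a session. This is a sketch that assumes the model follows the prefix format exactly; `identify_model` and `tally_models` are hypothetical helpers, not part of Cursor, and anything that doesn't match the format is counted as "unknown".

```python
import re
from collections import Counter

# Matches prefixes like "Claude 3.5 Sonnet responding:" or "GPT-4o mini here:".
# Assumes the model followed the rule; anything else is counted as "unknown".
PREFIX = re.compile(r"^\s*(?P<model>.+?)\s+(?:responding|here):", re.IGNORECASE)


def identify_model(response: str) -> str:
    """Extract the self-reported model name from the start of a response."""
    match = PREFIX.match(response)
    return match.group("model") if match else "unknown"


def tally_models(responses: list[str]) -> Counter:
    """Count how often each self-reported model appears across responses."""
    return Counter(identify_model(r) for r in responses)


saved = [
    "Claude 3.5 Sonnet responding: Here's the refactored auth module...",
    "GPT-4o mini here: Added a docstring to parse_config().",
    "GPT-4o mini here: Renamed tmp to retry_count.",
    "Sure, here's the fix.",
]
print(tally_models(saved).most_common())
```

Because the names are self-reported, use the tally to spot broad patterns (how often simple edits land on a lightweight model, for example) rather than as an exact audit.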

Why I Was Hesitant

The biggest complaint about auto selection? Lack of transparency. You can't easily see which model handled your request, making it feel like a black box.

But once I started using it more, I realized it's actually quite predictable. It's optimizing for the sweet spot between cost, speed, and quality.

Making It Work for You

Don't treat auto selection as set-and-forget. Here's my approach:

- Use auto selection for general development workflow

- Manually select premium models when tackling something complex

- Monitor your usage patterns to see when auto selection serves you well

Think of it like adaptive cruise control—it handles the routine stuff intelligently, but you can take manual control when needed.

The Real Value

Auto model selection isn't about being cheap—it's about being resource-efficient. Why pay premium prices for tasks that don't require premium intelligence?

The bottom line: For many workflows, auto selection could cut your AI costs by 50-80% without meaningfully impacting your development experience. The question isn't whether it works; it's whether you can afford not to use it.

In a typical development workflow, if roughly 90% of your requests are handled by efficient models that cost about a tenth as much, and the remaining 10% (the complex ones) escalate to premium models, overall quality stays high while costs drop sharply.
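Here's the arithmetic behind that 90/10 split as a small Python function. It assumes every request uses roughly the same number of tokens, so a cheap request simply costs the premium price divided by the price ratio; `blended_cost_ratio` is a hypothetical helper for illustration.

```python
def blended_cost_ratio(cheap_share: float, price_ratio: float) -> float:
    """Cost of a mixed workload relative to sending everything to a premium model.

    cheap_share: fraction of requests handled by the cheaper model (0 to 1)
    price_ratio: how many times cheaper the cheap model is per request
    """
    if not 0.0 <= cheap_share <= 1.0:
        raise ValueError("cheap_share must be between 0 and 1")
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    premium_share = 1.0 - cheap_share
    # Cheap requests cost 1/price_ratio each; premium requests cost 1 each.
    return cheap_share / price_ratio + premium_share


ratio = blended_cost_ratio(0.9, 10)
print(f"relative cost: {ratio:.2f}, savings: {1 - ratio:.0%}")
```

With a 90% cheap share and a 10x price gap, total spend falls to about 19% of the all-premium baseline, an 81% saving. Drop the cheap share to 50% and the saving shrinks to 45%, which shows how much the result depends on your task mix.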

It's one of those features that seems minor but can have a major impact on your AI budget.